# Web Scraper - Metadata

A web scraper and metadata display tool developed during the cybersecurity bootcamp at 42Madrid.

The purpose of this project is to create two tools: one that automatically extracts information from the web, and one that analyzes that information for sensitive data.

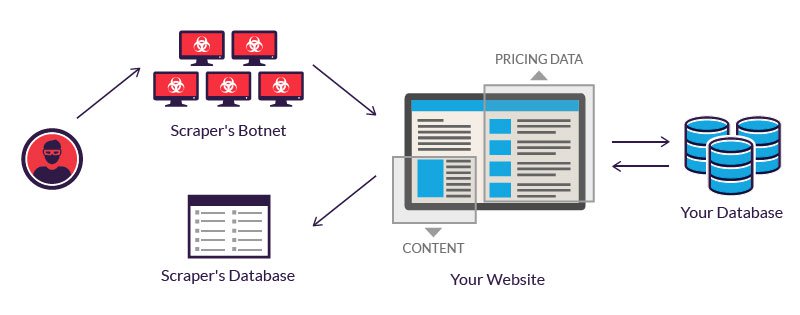

The Spider program recursively extracts all the images, PDF and DOCX files from a website, given a URL as a parameter. Tools like this are called web scrapers.
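The recursive extraction Spider performs can be sketched with the Python standard library alone. This is a minimal illustration, not the project's spider.py: `LinkCollector`, `crawl`, the injected `fetch` callable and the exact extension list are hypothetical, and restricting the crawl to the starting host is an assumption. Passing `fetch` in lets the traversal be shown without network access; a real tool would fetch pages over HTTP.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

# Assumed extension list: common image types plus the PDF/DOCX files Spider targets.
WANTED = (".jpg", ".jpeg", ".png", ".gif", ".bmp", ".pdf", ".docx")


class LinkCollector(HTMLParser):
    """Split the links of one page into downloadable files and pages to visit."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.pages = set()
        self.files = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "img":
            url = attrs.get("src")
        elif tag == "a":
            url = attrs.get("href")
        else:
            return
        if not url:
            return
        absolute = urljoin(self.base_url, url)  # resolve relative links
        if urlparse(absolute).path.lower().endswith(WANTED):
            self.files.add(absolute)
        elif tag == "a":
            self.pages.add(absolute.split("#")[0])  # drop #fragments


def crawl(start_url, max_depth, fetch):
    """Breadth-first crawl up to max_depth link hops from start_url.

    fetch(url) must return the page HTML as a string, or None on failure.
    Returns the set of absolute URLs of wanted files found along the way.
    """
    host = urlparse(start_url).netloc
    seen = {start_url}
    frontier = [start_url]
    found = set()
    for _depth in range(max_depth + 1):  # depth 0 is the start page itself
        next_frontier = []
        for url in frontier:
            html = fetch(url)
            if html is None:
                continue
            parser = LinkCollector(url)
            parser.feed(html)
            found |= parser.files
            for link in parser.pages:
                # Stay on the starting host and never revisit a page.
                if urlparse(link).netloc == host and link not in seen:
                    seen.add(link)
                    next_frontier.append(link)
        frontier = next_frontier
    return found
```

Breadth-first order makes the depth limit straightforward: every page in `frontier` is exactly `_depth` hops from the start URL, so stopping after `max_depth + 1` rounds bounds the recursion.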

Web scraping tools are software bots programmed to examine websites and extract information from them. A wide variety of bot types are used, many of them fully customizable to:

The second program, Scorpion, receives image files as parameters, analyzes them for EXIF data and other metadata, and displays the results on screen. It supports at least the same extensions that Spider handles, and it displays basic attributes such as the creation date as well as other EXIF data.
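To illustrate where EXIF data lives, the sketch below parses the APP1 segment of a JPEG file directly with the standard library. It is not Scorpion's actual code and makes several simplifying assumptions: it handles JPEG only, reads only the first image directory (IFD0), decodes only a few tag types, and does not guard against malformed files. `read_exif` and `_parse_ifd0` are hypothetical names.

```python
import struct
import sys

# A few IFD0 tag IDs defined by the TIFF/EXIF specifications.
TAG_NAMES = {
    0x010F: "Make",
    0x0110: "Model",
    0x0112: "Orientation",
    0x0131: "Software",
    0x0132: "DateTime",
    0x013B: "Artist",
}


def _parse_ifd0(tiff):
    """Decode ASCII, single SHORT and single LONG values of known IFD0 tags."""
    order = {b"II": "<", b"MM": ">"}.get(tiff[:2])  # little or big endian
    if order is None:
        return {}
    magic, ifd_offset = struct.unpack(order + "HI", tiff[2:8])
    if magic != 42:
        return {}
    (count,) = struct.unpack(order + "H", tiff[ifd_offset:ifd_offset + 2])
    tags = {}
    for i in range(count):
        start = ifd_offset + 2 + 12 * i  # each IFD entry is 12 bytes
        entry = tiff[start:start + 12]
        tag, value_type, n = struct.unpack(order + "HHI", entry[:8])
        name = TAG_NAMES.get(tag)
        if name is None:
            continue
        if value_type == 2:  # ASCII: stored inline only if it fits in 4 bytes
            if n <= 4:
                raw = entry[8:8 + n]
            else:
                (offset,) = struct.unpack(order + "I", entry[8:12])
                raw = tiff[offset:offset + n]
            tags[name] = raw.split(b"\x00", 1)[0].decode("ascii", "replace")
        elif value_type == 3 and n == 1:  # single SHORT
            tags[name] = struct.unpack(order + "H", entry[8:10])[0]
        elif value_type == 4 and n == 1:  # single LONG
            tags[name] = struct.unpack(order + "I", entry[8:12])[0]
    return tags


def read_exif(data):
    """Return {tag_name: value} from the EXIF block of JPEG bytes ({} if none)."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG file")
    pos = 2
    while pos + 4 <= len(data) and data[pos] == 0xFF:
        marker = data[pos + 1]
        if marker in (0xD9, 0xDA):  # end of image / start of scan: no more metadata
            break
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])  # includes itself
        segment = data[pos + 4:pos + 2 + length]
        if marker == 0xE1 and segment.startswith(b"Exif\x00\x00"):
            return _parse_ifd0(segment[6:])  # offsets are relative to the TIFF header
        pos += 2 + length
    return {}


if __name__ == "__main__":
    for path in sys.argv[1:]:
        with open(path, "rb") as f:
            print(path, read_exif(f.read()))
```

Tags such as `Make`, `Model`, `Software` and `DateTime` are exactly the kind of metadata that can identify the device or person behind a file; a complete tool would also follow the EXIF sub-IFD and GPS pointers.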

Metadata is information that describes other data; it is essentially data about data. It is embedded in images and documents and can reveal sensitive information about the people who created or modified them.
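Beyond EXIF, Scorpion is described as showing basic file attributes such as the creation date. A hedged sketch using the standard library's `os.stat` follows; `basic_attributes` is a hypothetical name. A true creation time is only exposed on some platforms (for example `st_birthtime` on macOS), and on Linux `st_ctime` is the time of the last metadata change rather than creation, so the sketch reports the creation date as unavailable when it cannot be read.

```python
import os
from datetime import datetime, timezone


def _fmt(timestamp):
    """Format a POSIX timestamp as an ISO 8601 string in UTC."""
    return datetime.fromtimestamp(timestamp, tz=timezone.utc).isoformat()


def basic_attributes(path):
    """Return the size and timestamps of a file as a dict."""
    st = os.stat(path)
    attrs = {
        "size_bytes": st.st_size,
        "modified": _fmt(st.st_mtime),
        "accessed": _fmt(st.st_atime),
    }
    # Creation time is not portable: st_birthtime exists only on some platforms.
    birth = getattr(st, "st_birthtime", None)
    attrs["created"] = _fmt(birth) if birth is not None else "unavailable"
    return attrs
```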

To run the Python scripts, install the requirements listed in `requirements.txt`:

```bash
pip install -r requirements.txt
```


Spider usage:

```bash
# Recursive mode
python3 spider.py -r <URL>

# Recursive mode + depth level
python3 spider.py -r <URL> -l <N>

# Recursive mode + download directory path
python3 spider.py -r <URL> -p <PATH>

# Recursive mode + silent output
python3 spider.py -r <URL> -S

# File mode
python3 spider.py -f <URL-RESOURCE>

# Print help message
python3 spider.py -h
```
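The Spider options above could be declared with `argparse` roughly as follows. This is a sketch, not the project's parser: the `dest` names and the defaults (depth 5, path `./data/`) are assumptions. `-h` needs no declaration because `argparse` adds it automatically.

```python
import argparse


def build_parser():
    """Build a parser mirroring the documented spider.py flags."""
    parser = argparse.ArgumentParser(
        prog="spider.py",
        description="Download images, PDF and DOCX files from a website.",
    )
    # Recursive mode and file mode are alternatives: exactly one is required.
    mode = parser.add_mutually_exclusive_group(required=True)
    mode.add_argument("-r", dest="url", metavar="URL",
                      help="recursive mode: scrape starting from URL")
    mode.add_argument("-f", dest="resource", metavar="URL-RESOURCE",
                      help="file mode: download a single resource")
    parser.add_argument("-l", dest="depth", type=int, default=5,
                        help="maximum recursion depth (assumed default: 5)")
    parser.add_argument("-p", dest="path", default="./data/",
                        help="download directory (assumed default: ./data/)")
    parser.add_argument("-S", dest="silent", action="store_true",
                        help="silent output: do not print progress to the console")
    return parser


if __name__ == "__main__":
    print(build_parser().parse_args())
```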

Running the tool creates a /data folder and a /logs folder in the repository directory: /data contains the files downloaded from the target website, and /logs contains a log of the actions performed.
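One way the /data and /logs folders and the silent flag could fit together is with the standard `logging` module, as in the minimal sketch below. `setup_output` and the log file name `spider.log` are hypothetical; the sketch assumes every action is always written to the log file, while console output is suppressed in silent mode.

```python
import logging
import os


def setup_output(base_dir, silent=False):
    """Create data/ and logs/ under base_dir; return (logger, data_dir)."""
    data_dir = os.path.join(base_dir, "data")
    logs_dir = os.path.join(base_dir, "logs")
    os.makedirs(data_dir, exist_ok=True)
    os.makedirs(logs_dir, exist_ok=True)

    logger = logging.getLogger("spider")
    logger.setLevel(logging.INFO)
    logger.propagate = False  # do not duplicate messages via the root logger
    for handler in list(logger.handlers):  # safe to call more than once
        logger.removeHandler(handler)
        handler.close()
    fmt = logging.Formatter("%(asctime)s %(levelname)s %(message)s")

    # Every action is always recorded in logs/spider.log ...
    file_handler = logging.FileHandler(os.path.join(logs_dir, "spider.log"))
    file_handler.setFormatter(fmt)
    logger.addHandler(file_handler)

    # ... and echoed to the console unless silent mode (-S) is on.
    if not silent:
        console = logging.StreamHandler()
        console.setFormatter(fmt)
        logger.addHandler(console)
    return logger, data_dir
```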

Scorpion usage:

```bash
# Resources mode
python3 scorpion.py FILE1 FILE2 FILE3 ...

# Directory mode
python3 scorpion.py -d <DIRECTORY-PATH>
```
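Directory mode has to gather the files Scorpion can analyze before reading their metadata. A sketch with `os.walk` follows; `collect_files` and `SUPPORTED` are hypothetical names, the extension set is an assumption based on the file types Spider downloads, and descending into subdirectories is also an assumption (the tool may only read the top level).

```python
import os

# Assumed extension set: the image types plus the PDF/DOCX files Spider downloads.
SUPPORTED = {".jpg", ".jpeg", ".png", ".gif", ".bmp", ".pdf", ".docx"}


def collect_files(directory):
    """Return sorted paths under directory whose extension is supported."""
    matches = []
    for root, _dirs, files in os.walk(directory):
        for name in files:
            # Compare extensions case-insensitively so photo.JPG is included.
            if os.path.splitext(name)[1].lower() in SUPPORTED:
                matches.append(os.path.join(root, name))
    return sorted(matches)
```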

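Both tools can also be run inside a Docker container. To build it and open a shell in one step:

```bash
make && make exec
```

Available Makefile rules:

```bash
# Build image and container
make

# Get a bash shell inside the container
make exec

# Build a new container
make dock

# Build image
make image

# Remove image and container
make fclean
```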

Inside the container, two directories are created in the user’s /home directory and kept in sync with the directories of both tools through Docker volumes.

Finished project.